Presentation: Data Mesh Paradigm Shift in Data Platform Architecture

This presentation is now available to view on InfoQ.com

What You’ll Learn

- Hear about the Data Mesh Paradigm, what it means and how it may affect data systems.

- Find out what the main constructs of the Data Mesh Paradigm are.

Abstract

Many enterprises are investing in their next-generation data platforms, hoping to democratize data at scale to provide business insights and ultimately make automated, intelligent decisions. Data platforms based on the data lake architecture have common failure modes that lead to unfulfilled promises at scale.

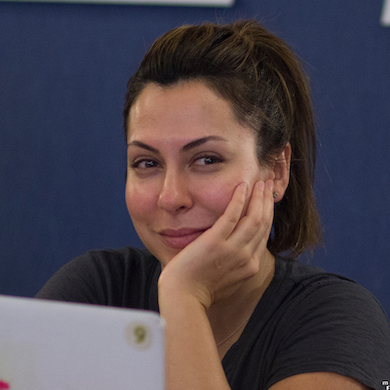

In this talk, Zhamak shares her observations on the failure modes of the centralized paradigm of the data lake and its predecessor, the data warehouse.

She introduces Data Mesh, the next-generation data platform, which shifts to a paradigm drawn from modern distributed architecture: considering domains as the first-class concern, applying platform thinking to create self-serve data infrastructure, and treating data as a product.

Tell us about your work.

I started in the industry as a software engineer and practitioner more than 20 years ago, and I've stayed very close to technology and curious about all its different aspects, from hardware development and network protocol architecture to enterprise architecture and microservices. For the last two years I've been focusing on data at scale: data platforms and data architecture at scale, hoping to bring my experience with operational systems at scale to the world of data.

What does "Data mesh paradigm shift in data architecture" mean?

It's a long title. I'm sure we can cut a few words out of it. When I came to the world of data a couple of years ago, I looked at large enterprises that have been trying to harness their data, to get their arms around it so they can use it for a variety of consumption cases: everything from machine learning, to becoming a data-driven or AI-first company, to business analytics and business intelligence reports, or just feeding their applications. What I noticed is that the architecture, practices and principles are heavily influenced by the data warehousing principles of 30 years ago. There's still a widely accepted assumption, a paradigm, that the data has to be centralized in one place and, through Conway's law, owned by one team before anyone can use it. I'm going to try to convince the audience to challenge that assumption and the status quo. There is a wonderful book from 1962, The Structure of Scientific Revolutions, by the American physicist Thomas Kuhn, that talks about how a paradigm shift happens. He coined the term "paradigm shift" to describe how science progresses. You start with some assumptions, an existing paradigm with its theories and constructs, and you try to improve on it. Then at some point you reach a phase of crisis, where the problems you are trying to solve cannot be answered with the solutions of the existing paradigm. That's where the shift happens: you think outside the box and approach the problem very differently. In physics, for example, subatomic observations led to the paradigm shift from Newtonian mechanics to quantum mechanics.

I hope that I can do the same thing and create a paradigm shift in the fundamental constructs and assumptions about how data needs to be managed, to satisfy both the consumption of ubiquitous data, available within the organization across hundreds of systems or beyond its bounds, and its use in a very diverse set of consumption models.

I call that paradigm shift data mesh. I have to say I wasn't very creative in choosing the name, but it is what it is. Fundamentally, it shows, at the level of principles, a contrast with the existing principles underpinning a lot of data platform architectures, and then, in practice, what the components of this new architecture are and how to go about building them.

Would you talk more about not having the big data warehouse in the sky?

I like to embrace a few first principles, and then we can go from there. The first principle is that if you go to the moon and look back at your data platform architecture on Earth, what you end up seeing is bounded contexts of different domain data sets that encapsulate access to their data in a polyglot form. The data itself is bounded around the domain, where it has its own lifecycle, independent of the other domains. To scale out any architecture, we have to find an axis along which to decompose it. I argue that the first-class architectural component for distribution and decentralization should be the data itself, organized around its domain, and the data domain might be very closely aligned with the point where the data originates. In healthcare, that would be claims data, your clinical visits, your lab results: domains very much aligned with the point where the data originated.

Then you might also have domains that are aggregated later on top of the source data. In healthcare, the patient record is the holy grail of domain data: an aggregation of all your biomarkers, your medical history and all the lab results you get twice a year, kept over time. That's another domain, but it's a projected, aggregated domain. So the first principle is that we're going to break up the ownership of the data, and our architecture, around polyglot access to these domain constructs, which I call data products. Right now, if we zoom out to the moon and look back at our data, what we see is pipelines. Pipelines are the first-class concern.

We try to decompose them to scale out, accessing the data through pipelines. I know of really famous large tech companies here in Silicon Valley whose analytics teams decompose like that, across the boundaries of pipelines. So that's one principle: the pipeline becomes a second-class concern and an implementation detail of these data products, because they will still have a pipeline implementation; they still have to ingest data, process it and serve it.
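As a rough illustration of the pipeline becoming an implementation detail of a data product, here is a minimal Python sketch. The `DataProduct` class, its fields, and the `ingest`/`serve` split are hypothetical names chosen for this example, not an API from the talk; the point is only that ingestion and validation live inside the domain-owned product, and consumers read through a single output method.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical sketch: a domain-owned data product that hides its pipeline.
@dataclass
class DataProduct:
    name: str
    domain: str
    owner: str                    # data product owner accountable for the lifecycle
    schema: dict[str, type]       # published contract that consumers rely on
    documentation: str
    _rows: list[dict] = field(default_factory=list, repr=False)

    def ingest(self, source_rows: list[dict]) -> None:
        """Pipeline step (implementation detail): validate and store source rows."""
        for row in source_rows:
            # Keep only schema fields; a missing field raises KeyError.
            clean = {k: row[k] for k in self.schema}
            for k, expected in self.schema.items():
                if not isinstance(clean[k], expected):
                    raise TypeError(f"{self.name}.{k} expected {expected.__name__}")
            self._rows.append(clean)

    def serve(self, predicate: Callable[[dict], bool] = lambda r: True) -> list[dict]:
        """Output port: the only way consumers read the data (returns copies)."""
        return [dict(r) for r in self._rows if predicate(r)]


lab_results = DataProduct(
    name="lab_results",
    domain="clinical",
    owner="lab-results-team",
    schema={"patient_id": str, "test": str, "value": float},
    documentation="One row per lab test result, as reported by the lab system.",
)
lab_results.ingest([
    {"patient_id": "p-1", "test": "HbA1c", "value": 5.6, "lab_internal_flag": "x"},
    {"patient_id": "p-2", "test": "HbA1c", "value": 6.1},
])
print(lab_results.serve(lambda r: r["value"] > 6.0))
# [{'patient_id': 'p-2', 'test': 'HbA1c', 'value': 6.1}]
```

Consumers never see the source system's internal fields (such as the hypothetical `lab_internal_flag`); the pipeline could be swapped out without changing what `serve` returns.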

The second main principle. OK, now I've distributed my data, which goes against everything we have believed for 30 years, because executives have all dreamed of centralizing data, and we're saying no, don't do that, decentralize it. So once you've decentralized the data, how do you make sure it is still accessible, and that you don't end up in the silo situation you're in today, with hundreds of databases running around your organization that are so difficult to access? The second principle is about treating these analytical data sets as products. The reason I say products is that finding, using and making sense of the data should be a great experience. Data product ownership is another concept that I hope we can embrace with data mesh decentralization: these domains have their own data product owners, who have a vision for the lifecycle of the data and how it will evolve, and who make sure the schemas are established, the documentation is in place, and their data sets are interoperable.

Product ownership is the second construct. Then there is the third construct. We have distributed technology around domains before, with microservices, and there is an analogy between this distribution and microservices. We said every domain would own its own full stack: you have full autonomy, go build all the stack you need from bare metal up. Then we realized that's just crazy, because of the amount of overhead and repeated work each of these domains needs to do. So we swung back and said, OK, we're going to extract all of these cross-cutting technology concerns into a self-serve data infrastructure layer.

So the third principle: for any domain to provide its analytical data as events, how can we abstract away all the middleware work that goes into building the infrastructure, so that I can access the data with proper access control? I don't have to worry about managing my own Spark cluster or my own storage accounts. We abstract that away into what I call self-serve data infrastructure. That's another piece of the puzzle. The final piece of the puzzle is governance. With healthcare data, you have members' information in one place, their claims in another place and their lab results in yet another. Usually the operational systems that data comes from have their own internal identifiers and so on. For these systems to be joined or correlated nicely, we need some standardization in place that maps those internal IDs to global identifiers. That's another aspect: putting standardization in place so that we get an ecosystem effect by merging the data.
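The identifier-mapping idea can be sketched in Python, assuming a governance-owned lookup table from (source system, internal ID) pairs to a global member ID. The system names, IDs, and the `to_global` and `correlate` functions are hypothetical; a production setup would likely keep this mapping in a shared service rather than an in-memory dict, and the join shown is a plain inner join.

```python
# Hypothetical sketch: each source system keeps its own internal member IDs;
# a shared, governance-owned mapping translates them to one global ID.
GLOBAL_ID_MAP: dict[tuple[str, str], str] = {
    ("claims_system", "C-1001"): "member-42",
    ("lab_system", "L-77"): "member-42",
    ("claims_system", "C-1002"): "member-43",
}

claims = [
    {"system": "claims_system", "member_id": "C-1001", "claim_amount": 120.0},
    {"system": "claims_system", "member_id": "C-1002", "claim_amount": 80.0},
]
lab_rows = [
    {"system": "lab_system", "member_id": "L-77", "test": "HbA1c", "value": 6.1},
]


def to_global(row: dict) -> dict:
    """Replace a system-specific member ID with the global identifier."""
    gid = GLOBAL_ID_MAP.get((row["system"], row["member_id"]))
    if gid is None:
        raise KeyError(f"no global ID for {row['system']}:{row['member_id']}")
    out = {k: v for k, v in row.items() if k not in ("system", "member_id")}
    out["global_member_id"] = gid
    return out


def correlate(left: list[dict], right: list[dict]) -> list[dict]:
    """Inner-join two domain data sets on the global member ID."""
    right_by_id: dict[str, list[dict]] = {}
    for right_row in map(to_global, right):
        right_by_id.setdefault(right_row["global_member_id"], []).append(right_row)
    joined = []
    for left_row in map(to_global, left):
        for right_row in right_by_id.get(left_row["global_member_id"], []):
            joined.append({**left_row, **right_row})
    return joined


print(correlate(claims, lab_rows))
# [{'claim_amount': 120.0, 'global_member_id': 'member-42', 'test': 'HbA1c', 'value': 6.1}]
```

Neither domain has to adopt the other's internal IDs; each only needs an entry in the shared mapping for its records to become joinable.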

You're targeting data engineers, architects and to some degree technical leadership when it comes to the standardization and governance of data architecture.

Absolutely. First and foremost, technical decision makers, whether a CIO or a CTO, can sit in this talk, take something away and not get lost in the technical details. Or you can be a data engineer, sit in this talk and hopefully have some of your existing mental model challenged a little, so that you think about structuring your work differently, solving a different kind of problem, or looking at a problem from a different angle. And I'll say something maybe a little bit controversial here.

What I'm hoping for, maybe not the day after this talk but in a year's time, is that data engineering as a siloed practice has gone away, and that our generalist systems engineers have data engineering tools in their toolbelt.
